Hi everyone!

What happened?

We have switched to Pomerium all-in-one, using the Kubernetes CRD for both HTTPS and TCP rules.

Since then, RAM usage has skyrocketed and the pods take a long time to become ready.

Persistence is configured with PostgreSQL.

If the TCP rules are removed from the CRD, both RAM usage and startup times return to normal.

What’s your environment like?

- Pomerium version (retrieve with `pomerium --version`):
- Server Operating System/Architecture/Cloud:

What’s your config.yaml?

Not applicable: all configuration is done through the CRD.

What did you see in the logs?

At runtime, the following message is logged repeatedly:

> Deprecated field: type envoy.type.matcher.v3.RegexMatcher Using deprecated option 'envoy.type.matcher.v3.RegexMatcher.google_re2' from file regex.proto. This configuration will be removed from Envoy soon. Please see Version history — envoy 1.37.0-dev-5742b1 documentation for details. If continued use of this field is absolutely necessary, see Runtime — envoy 1.37.0-dev-5742b1 documentation for how to apply a temporary and highly discouraged override.

No other errors are logged.

Additional context

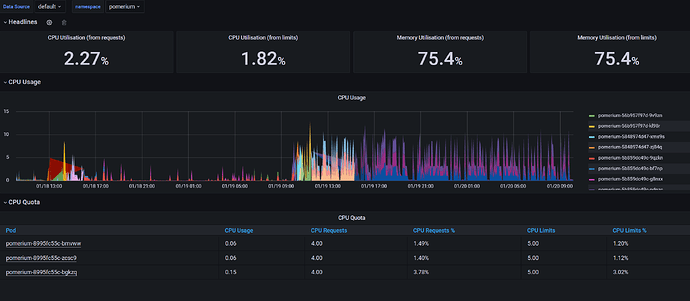

Please find the monitoring screenshots attached.